FluidFS and other file systems break large files into recipes of hash-identified blobs of binary data. Blobs can then be replicated with far more ease than a single file, as well as streamed from disk in a memory safe manner. Blobs are treated as single, independent units so the underlying data store doesn’t grow as files are duplicated. Finally, blobs can be encrypted individually and provide more opportunities for privacy.

Chunking files into blobs is a good idea.

The question then becomes, how do you meaningfully chunk a file? The most obvious thing to do is simply stride across a file by some block size, generating fixed length chunks. This poses one problem for the last chunk - what if it’s only a byte or two? We can slightly modify our algorithm to specify a minimum chunk size, and if the remainder is smaller than that size, append it to the last chunk to have a larger than block size piece.

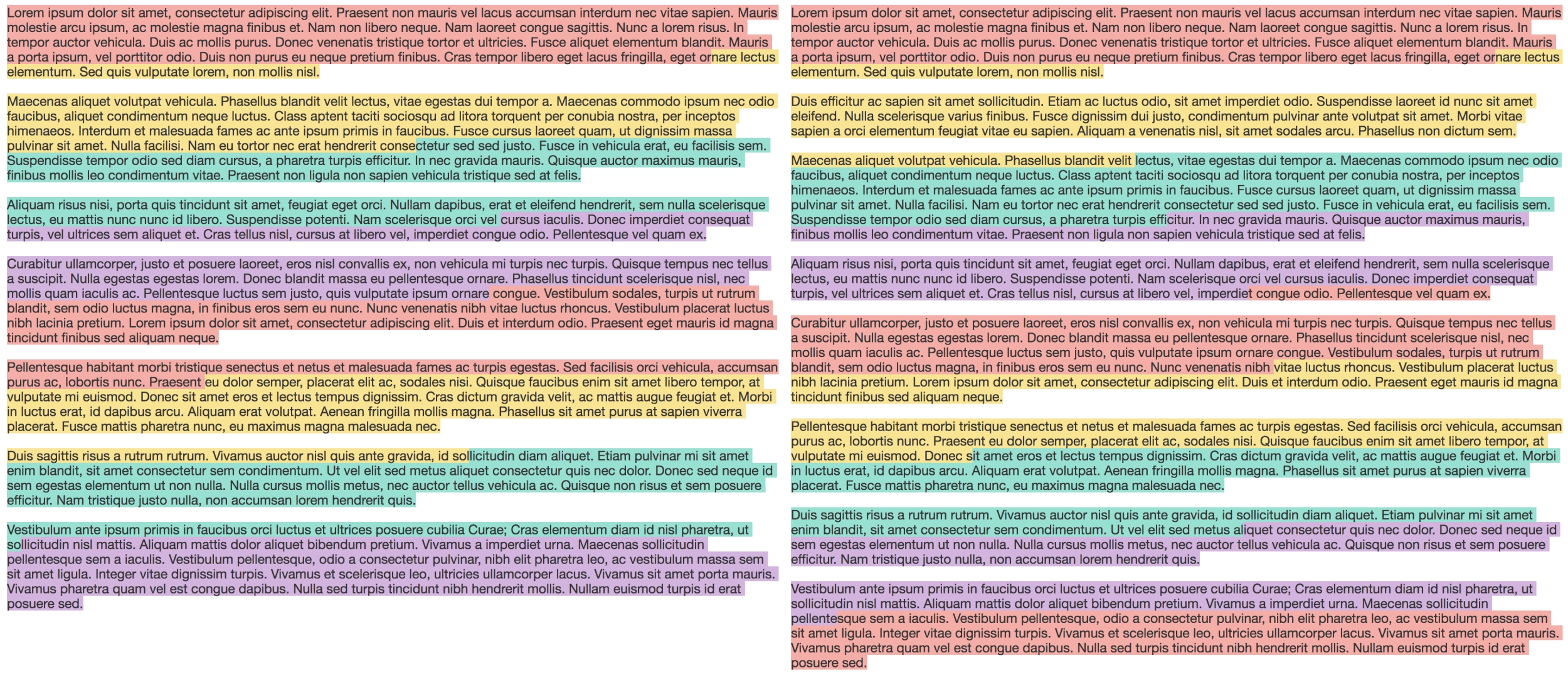

Fixed length chunks of 512 bytes and a minimum blocksize of 92 bytes highlighting an original and updated file. When the file is updated, all chunks after the first are modified.

In the above figure each blob created by fixed length chunking is highlighted in a different color. The file is divided into even, well formed chunks – however a problem occurs when the file is updated. By inserting a paragraph in between the first and second paragraphs, the chunking algorithm shifts all subsequent chunks; in fact no chunk following the first chunk is preserved. Simple, small updates so radically change the blobs that duplication becomes a large issue.

Variable length chunking uses the content to determine the splits between blocks by scanning for a specific pattern. Because it breaks up the blobs on pattern identification, the blobs don’t have a uniform length. Rabin-Karp chunking using a rolling hash across windows to identify the splits, and is the primary chunking mechanism used in FluidFS.

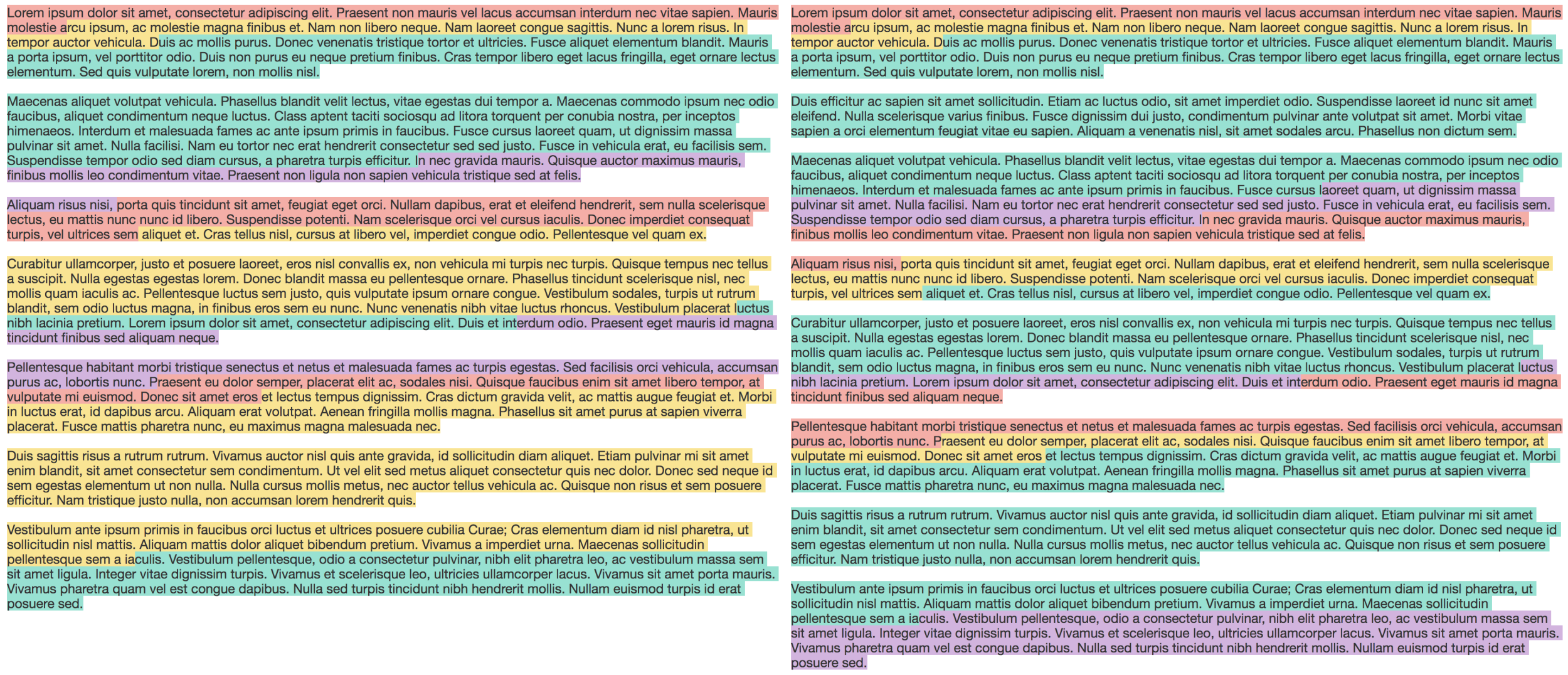

Rabin-Karp variable length chunks with a target block size of 512 bytes highlighting an original and updated file. When the file is updated, only the chunks surrounding the update are modified.

In the above figure you can see that the variable length chunks can be quite small or quite large. However, the key is that when the second paragraph is inserted into the document, only the second chunk is modified. A third chunk is added, but all other chunks are identical. In this way variable length chunking reduces the number of overall blobs that have to be replicated and stored.

The visualization method can be found at this gist. The offsets were generated using the FluidFS chunks debugger.